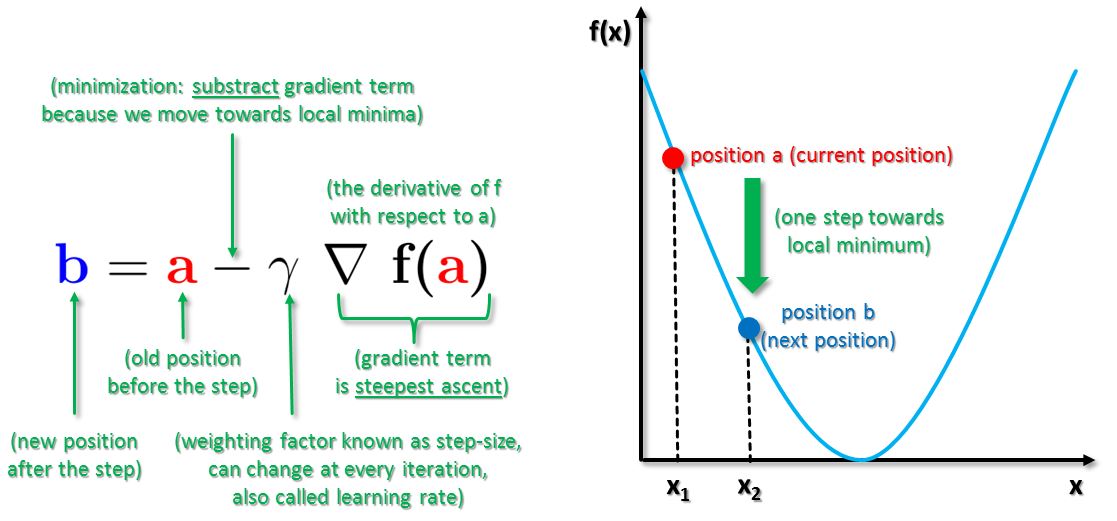

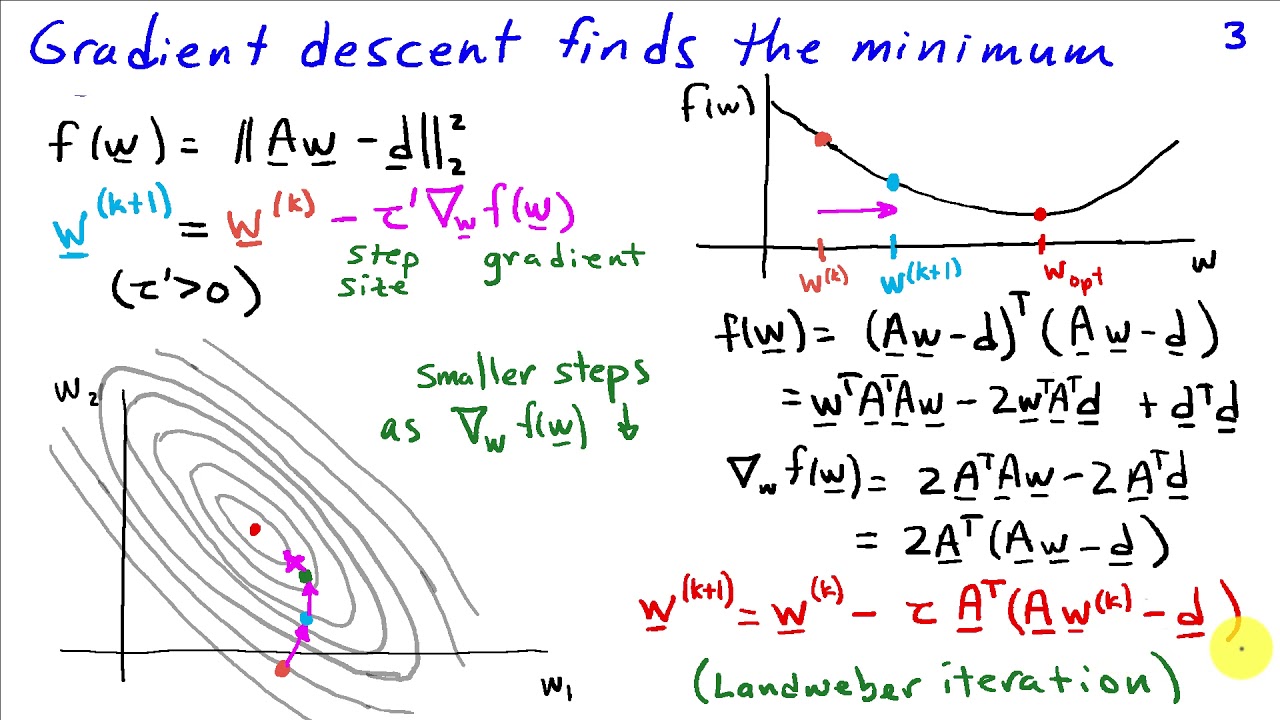

MathType - Gradient descent is an iterative optimization algorithm for finding local minima of multivariate functions. At each step, the algorithm moves in the direction opposite to the gradient, thereby reducing the value of the function.
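The update rule can be written as `x = x - learning_rate * grad_f(x)`. Here is a minimal sketch in plain Python. The function names, the fixed learning rate and the fixed number of steps are illustrative assumptions, not something the MathType post specifies. The example function `f(x, y) = (x - 3)^2 + 2 * (y + 1)^2` is also chosen for illustration; its minimum is known to be at `(3, -1)`.

```python
def gradient_descent(grad, x0, learning_rate=0.1, n_steps=100):
    """Minimise a function, given its gradient, by repeatedly stepping against it.

    The fixed learning rate and step count are illustrative assumptions.
    """
    x = list(x0)
    for _ in range(n_steps):
        g = grad(x)
        # Move opposite to the gradient: x <- x - learning_rate * grad f(x)
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical example: f(x, y) = (x - 3)^2 + 2 * (y + 1)^2, minimum at (3, -1).
def grad_f(p):
    x, y = p
    return [2 * (x - 3), 4 * (y + 1)]


x_min = gradient_descent(grad_f, [0.0, 0.0], learning_rate=0.1, n_steps=200)
print(x_min)  # approximately [3.0, -1.0]
```

With this choice each step shrinks the error in `x` by a factor of 0.8 and the error in `y` by a factor of 0.6. A learning rate that is too large makes the iterates overshoot and diverge instead.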

By a mysterious writer

Description

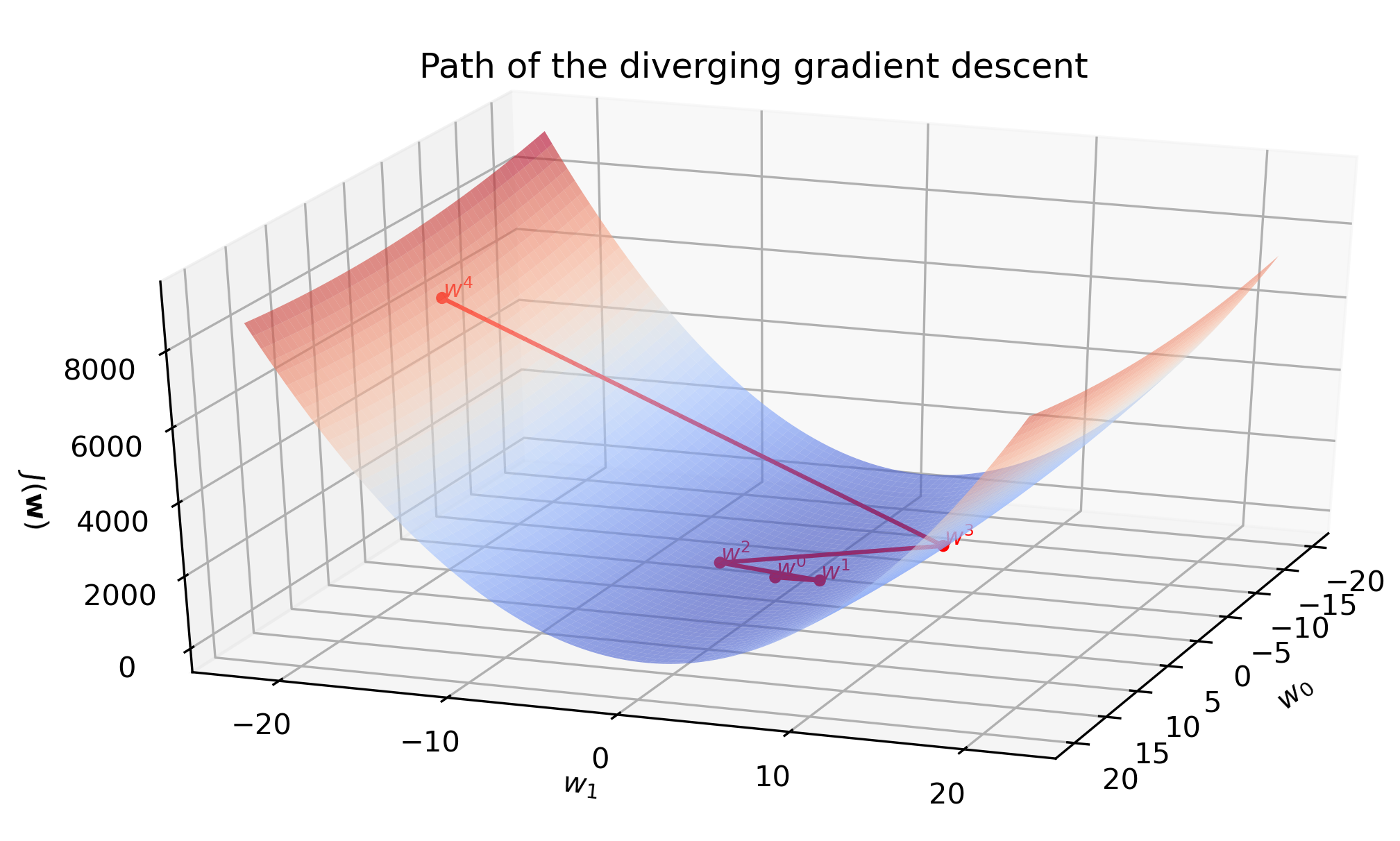

Gradient descent with linear regression from scratch in Python - Dmitrijs Kass' blog

[Solved] 4. Gradient descent is a first-order iterative optimisation

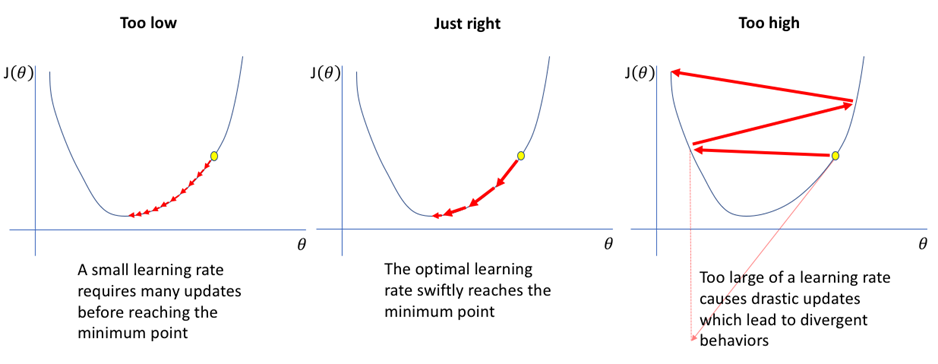

Optimization Techniques used in Classical Machine Learning ft: Gradient Descent, by Manoj Hegde

Gradient Descent Algorithm in Machine Learning - Analytics Vidhya

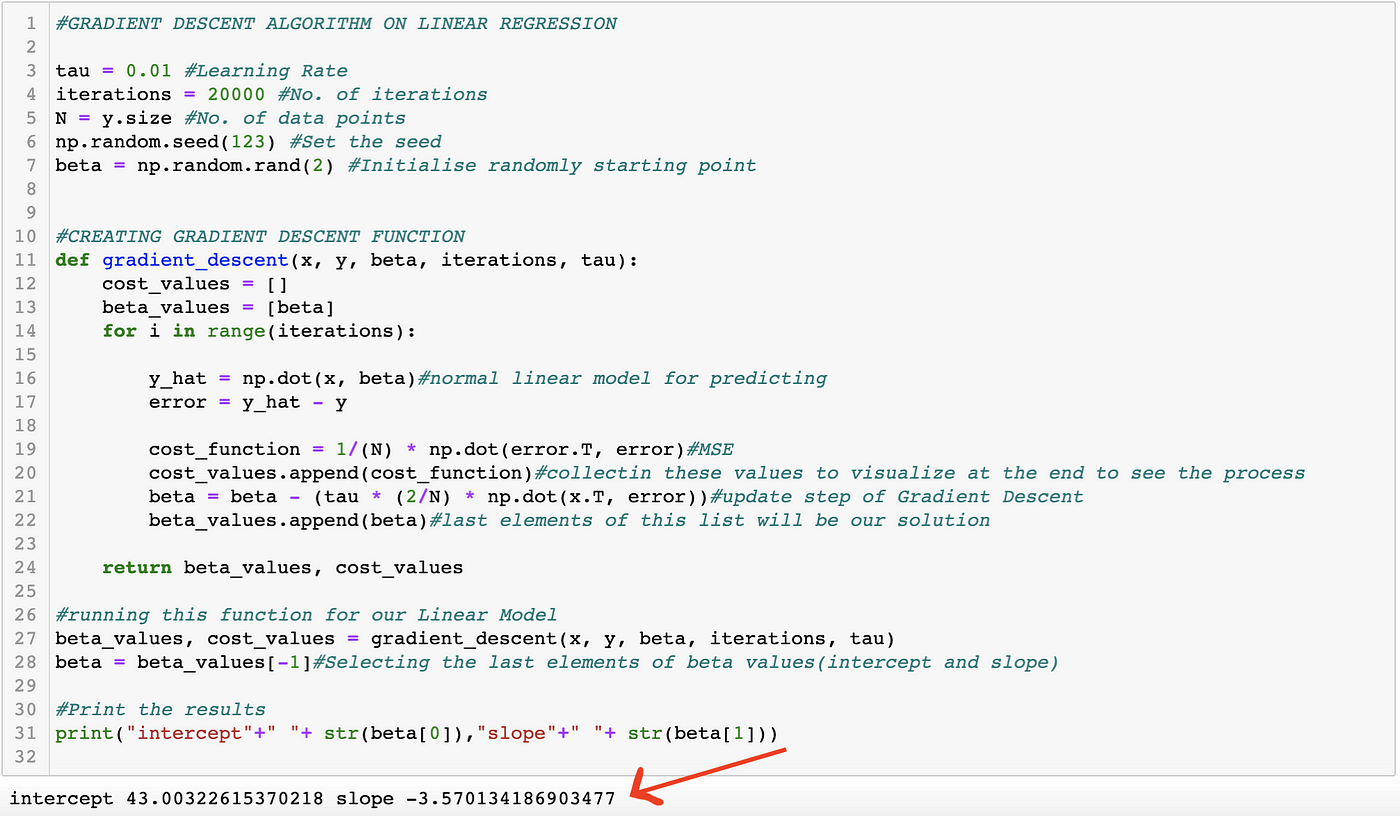

Explanation of Gradient Descent Optimization Algorithm on Linear Regression example, by Joshgun Guliyev, Analytics Vidhya

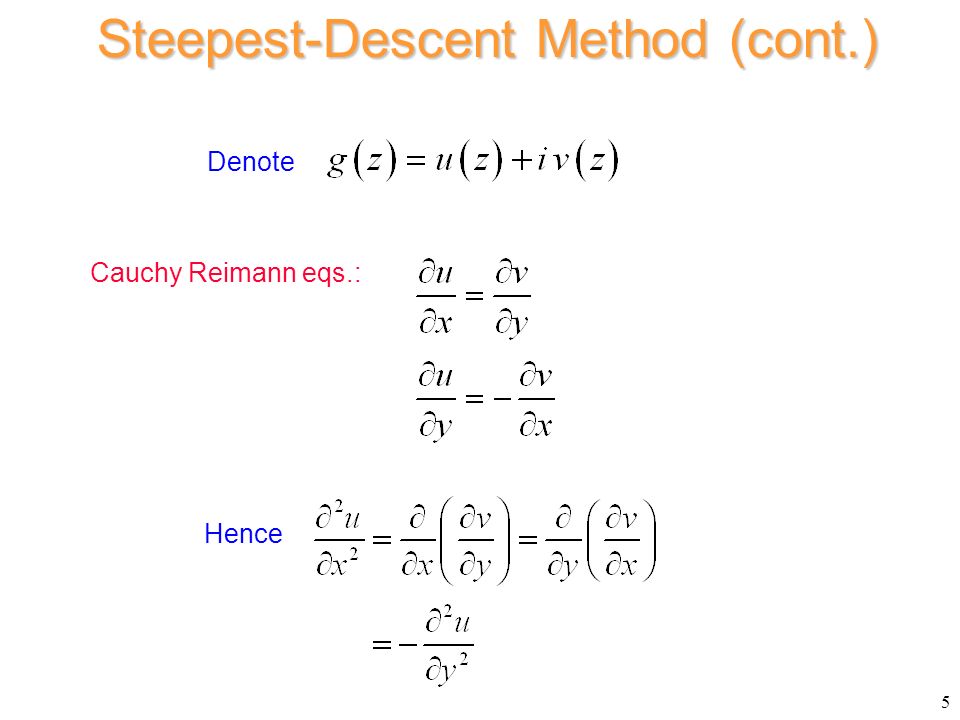

Gradient Descent Solutions to Least Squares Problems
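Several of the linked articles apply gradient descent to linear regression, which is a least-squares problem. Here is a minimal sketch that assumes a single feature and the mean squared error loss `L(w, b) = (1/n) * sum((w * x_i + b - y_i)^2)`. Its gradients are `dL/dw = (2/n) * sum(r_i * x_i)` and `dL/db = (2/n) * sum(r_i)`, where `r_i` is the residual. The function name, learning rate, step count and data are hypothetical, not taken from any of the linked posts.

```python
def fit_linear_regression(xs, ys, learning_rate=0.05, n_steps=5000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error.

    Hypothetical helper; learning rate and step count are illustrative.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_steps):
        # Residuals r_i = (w * x_i + b) - y_i
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradient of (1/n) * sum(r_i^2) with respect to w and b
        grad_w = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # Step against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Noise-free data generated from y = 2x + 1, so the least-squares fit is w = 2, b = 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = fit_linear_regression(xs, ys)
print(w, b)  # close to 2.0 and 1.0
```

The least-squares loss is convex in `(w, b)`, so the minimum that gradient descent reaches here is also the global one. A closed-form solution exists as well. The iterative version is shown because it applies the same generic gradient step.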

A gradient descent algorithm finds one of the local minima. How do we find the global minima using that algorithm? - Quora

Task 1 Gradient descent algorithm: In this project

machine learning - Java implementation of multivariate gradient descent - Stack Overflow

